AI-powered Robots Open Up an Underwater World

Dr. Englot operating a VideoRay ROV on Manhattan’s Pier 26 at the 2016 SUBMERGE New York City Marine Science Festival. (Credit: Stevens Institute of Technology)

Researchers from Stevens Institute of Technology are using algorithms and machine learning to train AI-powered robots to adapt to the unpredictable conditions beneath the ocean's surface. Their immediate goal is to enable these robots to inspect underwater infrastructure autonomously, reducing risk to human divers and improving security. However, the possibilities for deploying AI-powered robots underwater are numerous and exciting.

A more accessible platform

Some of America's most critical infrastructure, from undersea internet cables to oil and gas pipelines, is hidden underwater. That makes it challenging to inspect and protect, and challenging to inspect often enough. Human divers who inspect infrastructure must be specialists, trained not only to dive safely with equipment but also to spot complex engineering problems.

Smart underwater robots might be able to take humans out of the equation if we can train them to get the job done. Brendan Englot, Professor of Mechanical Engineering at Stevens Institute of Technology, spoke with EM about several projects the team is working on, all built around a low-cost mini ROV platform called the BlueROV.

"The BlueROV has really been a disruptive new technology because the price of working with autonomous underwater vehicles used to limit participation to the few who could afford a platform that cost 30 to 50 thousand dollars at a minimum," explains Dr. Englot. "The BlueROV platform is only about 2,500 dollars. It's really geared toward open-source sharing of hardware and software."

Dr. Englot and the team began working with the BlueROV platform about a year ago, and they are now developing algorithms for it to address a few different topics.

“One of the main applications that we’re interested in is accurate, autonomous inspection of subsea structures, spanning many different types of infrastructure,” details Dr. Englot. “The interest is just being able to increase the levels of automation and autonomy in subsea oil and gas operations so that divers and operators are not at risk to the same degree that they currently are.”

The team is also working on applications that would allow users to engage in persistent surveillance and inspection of the health and integrity of piers, bridges, ship hulls, and other underwater infrastructure.

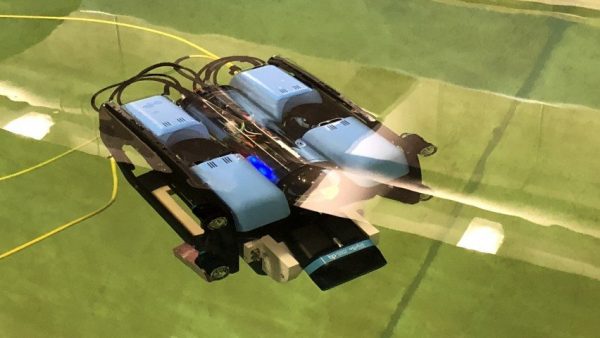

BlueROV. (Credit: Stevens Institute of Technology)

Training robots to explore the ocean’s depths

Working on these problems has led the team to focus on two basic research questions.

"One is the problem of how you explore an unknown environment," Dr. Englot describes. "Imagine you have to inspect a damaged pier. You know the geographical boundary of where it's located, but maybe you don't have a good prior model of it from before it was damaged, and you don't know where all the structures are located, or where the obstacles are that the robot will have to avoid. The robot's task is to dive down within the boundaries you gave it, explore on its own, avoid collisions, and come back with a complete 3-D map of the structures in that area."

The team is therefore developing algorithms that help a robot explore an unknown environment more efficiently, using its sensor data to decide how to map the area and where to drive next as the map comes together.
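To make the "where to drive next" decision concrete, here is a minimal sketch of frontier-based exploration, a common textbook technique; it is an illustration only, not necessarily the method the Stevens team uses. It assumes a simplified 2-D occupancy grid (the team builds 3-D maps) in which every cell is unknown, free, or occupied. A "frontier" is a free cell next to unknown space, and the robot heads to the nearest reachable one. The cell constants and the function names `find_frontiers` and `nearest_frontier` are hypothetical.

```python
from collections import deque

# Hypothetical cell labels for a simplified 2-D occupancy grid.
UNKNOWN, FREE, OCCUPIED = -1, 0, 1
NEIGHBORS = ((1, 0), (-1, 0), (0, 1), (0, -1))  # 4-connectivity


def find_frontiers(grid):
    """Return free cells that border at least one unknown cell."""
    rows, cols = len(grid), len(grid[0])
    frontiers = []
    for r in range(rows):
        for c in range(cols):
            if grid[r][c] != FREE:
                continue
            for dr, dc in NEIGHBORS:
                nr, nc = r + dr, c + dc
                if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == UNKNOWN:
                    frontiers.append((r, c))
                    break
    return frontiers


def nearest_frontier(grid, start):
    """Breadth-first search through known free space to the closest frontier.

    Returns None when no reachable frontier remains, i.e. the reachable
    area has been fully mapped and exploration can stop.
    """
    frontier_set = set(find_frontiers(grid))
    rows, cols = len(grid), len(grid[0])
    queue = deque([start])
    visited = {start}
    while queue:
        cell = queue.popleft()
        if cell in frontier_set:
            return cell
        r, c = cell
        for dr, dc in NEIGHBORS:
            nr, nc = r + dr, c + dc
            if (0 <= nr < rows and 0 <= nc < cols
                    and (nr, nc) not in visited and grid[nr][nc] == FREE):
                visited.add((nr, nc))
                queue.append((nr, nc))
    return None


grid = [
    [FREE, FREE, OCCUPIED, UNKNOWN],
    [FREE, FREE, FREE, UNKNOWN],
    [OCCUPIED, FREE, UNKNOWN, UNKNOWN],
]
print(find_frontiers(grid))            # [(1, 2), (2, 1)]
print(nearest_frontier(grid, (0, 0)))  # (2, 1)
```

In a real system the robot would drive to the chosen frontier, update the map with new sonar or camera data, and repeat until `nearest_frontier` returns None; smarter planners also weigh expected information gain and travel cost rather than distance alone.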

The other basic research question the team is pondering is how the robots can tackle various kinds of uncertainty they encounter underwater without a perfect model describing how everything behaves.

"That uncertainty includes currents that are pushing the robot around," remarks Dr. Englot. "We have some forecasting models for currents, but they're not available at very high resolution. There are also winds, waves, and other kinds of disturbances in the water, and the properties of the robot's sensors will vary depending on the conditions of the environment. You may not be able to see very well with a camera in certain areas due to the turbidity of the water. Your acoustic positioning system might not work very well in certain areas if the water's very shallow or if there are lots of submerged structures surrounding the robot."

The team's answer to these problems? A machine learning framework that lets the robot learn to maneuver in an environment where it lacks a perfect model, or has no model at all, while monitoring the water around it as it learns.

"Right now, our robot is equipped mostly with perceptual sensors, so it's able to measure its own motion relative to structures, or its motion relative to the surrounding water," clarifies Dr. Englot. "It needs to know how fast it's going, which is challenging to do with high accuracy in and of itself. But this also has the potential to incorporate measurements of the state of the environment, and we've thought about that. We haven't yet performed research where we collect measurements from the water itself, but many of the techniques we're using to inspect and map underwater structures could probably also be applied to mapping certain characteristics of the water in a desired region."

Dr. Englot and an ROV. (Credit: Stevens Institute of Technology, https://www.stevens.edu/news/robust-robotics-safer-world)

One of the biggest challenges for underwater robots is situational awareness: these vehicles must be aware enough of their surroundings to avoid causing damage, collisions, or other unwanted interactions as they move quickly and collect data. Machine learning can help here, allowing the robot to learn over time to extract more useful information even from grainy, poor-quality images.

The underwater ROV of the future?

One promising application for this kind of AI-powered underwater vehicle is more persistent monitoring of underwater infrastructure, without the health and safety risks or financial costs that are currently associated with that kind of surveillance.

"They're easily deployed," states Dr. Englot. "And unlike a diver, who might only be able to make it every six months, if you have robots at the ready, you could task them with inspecting and mapping something on demand."

Autonomous patrolling also makes randomized inspections, or randomized data collection, possible if that is the robot's task.

“To whatever extent you can also have robots patrolling for environmental monitoring, I think that’s another application,” comments Dr. Englot. “These robots might also be able to help handle oil spills and other emergencies.”

Of course, challenging problems remain before these little AI-powered robots can realize their full potential; underwater wireless communication is a particular problem.

"There would have to be some way for the robots to dock and upload their data to a human supervisor, who could be located remotely," adds Dr. Englot. "That continues to be one of the biggest challenges when you have a multi-robot framework or an untethered framework: getting the data to the people who need it is a tough problem."

For now, though, the team will continue to train the robots and improve the algorithms, hopefully making infrastructure inspection safer and more common in the near future.

